I’m not really a photogenic guy, but from time to time I enjoy saving memories of what surrounds me in the form of photos and videos, especially if I’m on a trip; it could be my last visit for all I know.

A phone is usually enough for the job, but sometimes a dedicated device that I can use solely to capture images sounds like the way to go, so I have a couple of cameras: an action cam (DJI Action 3) and a vlogging cam (DJI Osmo Pocket). They’re nice for the use case given that I’m not any kind of photo pro. I just want decent-looking, stabilized images; the rest is pretty much accessory, or so I thought.

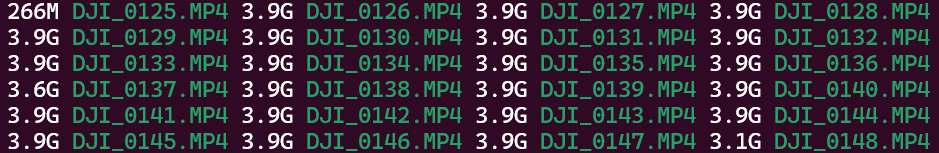

While on a trip, I rented a car and decided to record my way to some second-hand stores located on the outskirts of the city I was staying in, a good 90-minute road trip one way and around the same for the return. When the moment arrived to transfer all the media to a safer storage medium, I was reminded that the cameras split recordings into clips of about 4GB, one for roughly every 7 minutes. Didn’t care, it looked good.

Some weeks later, when I wanted to move them to their final destination, my home NAS, for multi-device offline viewing thanks to Immich, not only did they take quite some time to transfer, but I also noticed a few issues:

- As alluded to above, the cameras cut the recording when a single clip reaches about 4GB in size. DJI says they use FAT32, which has precisely this file size limit, and that explains it

- Given the settings I picked when recording (1080p 60 fps HQ), videos had a bitrate of around 80Mbps, or 80000Kbps if you will, which is more than double that of many conventional Blu-ray recordings and a little less than what can be found on 4K Blu-ray discs

- These two cameras have no built-in GPS, which is definitely not a problem for the majority of people and cases, but given the nature of what I wanted to capture this time, I think it would’ve been really nice to have

Let’s see…

FAT32

The first issue is not really that problematic, it may very well not even be an issue and instead more of a feature of this kind of device. The fact that DJI uses FAT32 and video files are capped at 4GB could come in handy when working with them and could even prevent corruption problems.

Transferring MPEG-4 files that are too big could be cumbersome. At more than 36GB per hour of video, that kind of transfer could take hours to complete between wireless devices. On top of that, MP4 is not a container format that plays along particularly well with errors and corruption, so the process would have to be restarted whenever that happens, which is frankly tedious.
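The numbers above can be double-checked with a bit of arithmetic. This is just a minimal sketch: the function names are mine, it assumes decimal units (1GB = 1000MB) and it ignores the audio track, so real files end up slightly bigger.

```bash
#!/bin/bash
# Storage needed per hour of video, in GB, for a bitrate given in Mbps.
gb_per_hour() {
  LC_ALL=C awk -v mbps="$1" 'BEGIN { printf "%.1f\n", mbps * 3600 / 8 / 1000 }'
}

# Minutes of video that fit in a clip of size_gb GB at a bitrate in Mbps.
minutes_per_clip() {
  LC_ALL=C awk -v gb="$1" -v mbps="$2" 'BEGIN { printf "%.1f\n", gb * 8000 / mbps / 60 }'
}

gb_per_hour 80         # prints 36.0
minutes_per_clip 4 80  # prints 6.7
```

At 80Mbps a 4GB clip fills up in a bit under 7 minutes, which matches what the cameras produced.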

On the other hand, if errors occur during the recording or while storing the videos in the MicroSD cards, the fact that they are cut into smaller pieces favors the odds of recovery.

In a nutshell, it may be inconvenient in a couple of aspects but no biggie. Next.

Bitrate

This is indeed a problem. Okay, not a problem per se but hear me out: I know the bigger the file the more information it holds inside and the better when the time to edit comes, no doubt, but I think in this case the size is not offering that much value.

With its 1/2.3-inch sensor, it’s not capturing that much light (comparatively, of course) at all and the added file size is translated to, I don’t know, noise? Still, having more information doesn’t hurt (or shouldn’t), but in this case I think I can get rid of a lot of bytes without sacrificing much image quality. At least that’s the reasoning.

With that in mind, a bitrate of 80000Kbps is just too much for casual videos that we might want to share on social media or play on a phone from time to time to share with friends. It would be different had the goal been to play them often on a big TV. In that case I would’ve gone straight for 4K, but I don’t have 20 MicroSD cards lying around to be able to keep recording without worrying.

So, given the problems their size presents to edit them, move them around or even play them, here’s where FFmpeg enters the game.

FFmpeg (pronounced /‘ɛfɛfɛm’pɛɡ/ 😉) helped me reduce the file sizes without a huge impact on image quality. These are the parameters I used:

ffmpeg -hide_banner -loglevel error -hwaccel qsv -c:v h264_qsv -i {input} -c:v h264_qsv -global_quality 25 {output}

- -hide_banner prevents the banner, copyright-related stuff and compilation options from being output, as they’re generally not useful data

- -loglevel error shows only errors

- -hwaccel qsv makes use of Intel Quick Sync Video hardware acceleration, which greatly speeds up the process

- -c:v h264_qsv specifies the codec, H.264 for compatibility reasons

- -global_quality 25 sets the quality level of the output file. The lower the number, the better the quality, so the idea is to strike some sort of balance. I’ll expand on this later

Results are very promising: files are reduced from 4GB to about 850MB, savings of close to 80%.
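For the record, the savings figure comes from simple arithmetic. A minimal sketch, with a function name of my own choosing and sizes in MB:

```bash
#!/bin/bash
# Percentage saved when a file shrinks from original_mb to reduced_mb.
savings_percent() {
  LC_ALL=C awk -v o="$1" -v r="$2" 'BEGIN { printf "%.0f\n", (1 - r / o) * 100 }'
}

savings_percent 4000 850  # prints 79
```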

Images are compressed to enhance the site’s performance. Original, full quality frames can be found here and here respectively.

But don’t blindly (heh) trust my or your eyes. There are image quality perception and comparison algorithms such as VMAF, developed by Netflix, or SSIM, developed by US universities, that can help us decide which FFmpeg encoding parameters are best given our needs.

I chose SSIM because in order to use Netflix’s method (by the way, don’t pass up the opportunity to read their blog) I would’ve needed to complete a couple of prior steps, such as making sure the videos use the YUV color space, which means encoding them first if that’s not the case. The laziness in me wins this time.

SSIM, the structural similarity index, works by simulating (or calculating) the quality someone would perceive in an image or a set of them, including aspects like luminance and contrast. More than one way of calculating it exists, but I opted for FFmpeg because it comes built in with the binary.

ffmpeg.exe -i {input1} -i {input2} -lavfi ssim=stats_file={output} -f null -

This command produces a pretty long file where we can see the comparison between each frame of both videos, giving us a general value and partial values for each of the three components of YUV (maybe there wasn’t a need to convert the videos before using VMAF after all 😛).

Its structure is similar to this:

After a light statistical analysis of the results for both 24 and 25 as -global_quality values in the FFmpeg command used to reduce quality, 24 has a slight advantage. The margin is small enough that I find it acceptable in exchange for the smaller files 25 produces, which made me double down on the decision to pick 25 as the final number.

Next, some numbers courtesy of CalculatorSoup (looks like the free version of Wolfram Alpha couldn’t handle it) about how similar the processed videos are to the original one on average, as well as the standard deviation in both cases. The higher the average and the lower the standard deviation (σ), the better.
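The same two numbers can also be pulled straight from the stats file with awk. This is only a sketch under a few assumptions: each line of the file carries an All:value field as written by FFmpeg’s ssim filter (n:… Y:… U:… V:… All:… followed by a dB figure in parentheses), the function name is mine, and I use the population standard deviation, which may differ slightly from what an online calculator reports.

```bash
#!/bin/bash
# Print the mean and the population standard deviation of the per-frame
# "All:" SSIM values found in an FFmpeg ssim stats file.
ssim_summary() {
  LC_ALL=C awk '
    {
      for (i = 1; i <= NF; i++) {
        if ($i ~ /^All:/) {
          v = substr($i, 5) + 0   # strip the "All:" prefix
          sum += v; sumsq += v * v; n++
        }
      }
    }
    END {
      if (n == 0) exit 1          # no SSIM values found
      mean = sum / n
      var = sumsq / n - mean * mean
      if (var < 0) var = 0        # guard against rounding noise
      printf "%.4f %.4f\n", mean, sqrt(var)
    }
  ' "$1"
}

# Usage (hypothetical file name):
#   ssim_summary ssim_q25.log
```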

Keeping in mind that, in general, an MSSIM value (mean SSIM) greater than or equal to 0.99 means two images are indistinguishable (Sec. 4.1 Luminance), the results appear to be satisfactory.

![68-95-99.7 rule formulas](https://cdn.inobtenio.com/img/posts/dji-bitrate-gps/68-95-99-rule_hu_7c9b1faeddf98c5c.webp "68-95-99.7 rule formulas")

On the one hand, with quality 24, the higher of the two, we have a mean of 0.9862 with a σ of 0.0084. This means that, following the formula above, ~95% of the time both videos have a similarity level between 96.94% and 100% and that more than 99% of the time that level is between 96.1% and 100%. Furthermore, they are on average 98.62% similar. Pretty nice.

On the other hand, with quality 25, the mean is 0.9844 with a σ of 0.0109, which means that ~95% of the time both videos have a similarity level between 96.26% and 100% and that more than 99% of the time that level is between 95.17% and 100%. Besides, they are on average 98.44% similar. Not too shabby.
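Those ranges are just the mean plus or minus 2σ and 3σ, with the upper end capped at 1 because similarity can’t exceed 100%. A minimal sketch of that calculation (the function name is mine):

```bash
#!/bin/bash
# Range covered by mean ± k·σ, with the upper bound capped at 1.
ssim_range() {
  LC_ALL=C awk -v m="$1" -v s="$2" -v k="$3" 'BEGIN {
    lo = m - k * s
    hi = m + k * s
    if (hi > 1) hi = 1
    printf "%.4f %.4f\n", lo, hi
  }'
}

ssim_range 0.9862 0.0084 2  # quality 24, ~95%:   0.9694 1.0000
ssim_range 0.9862 0.0084 3  # quality 24, ~99.7%: 0.9610 1.0000
ssim_range 0.9844 0.0109 2  # quality 25, ~95%:   0.9626 1.0000
ssim_range 0.9844 0.0109 3  # quality 25, ~99.7%: 0.9517 1.0000
```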

That difference sounds like a fair price to pay for saving some bytes here and there.

Was all this numeric fest unnecessary? Most likely, but I really hoped to get some numbers supporting or disproving my decision; happily, something closer to the former happened.

GPS

I realized the lack of geolocation info in the videos’ metadata when I added them to Immich, where a little map indicates where they were taken if said info is available. Good thing that it offers a built-in metadata editor, albeit basic, which I used to save the GPS coordinates of the point where each recording started; I had to watch them all and accurately locate them with the help of Google Maps.

Once geolocated, Immich created an external Exif file for each video, each with the same name as its video but a different extension: xmp.

So far so good, despite the Exif metadata being external in this case, having the same file name helps with some video players recognizing them automatically so it shouldn’t be a problem; however, I wanted all the data to be embedded in the videos themselves, that’s better and easier to manage.

So I used ExifTool to solve that:

exiftool -gpsposition -c "%.7f" {input}

- -gpsposition -c "%.7f" extracts the GPS data in the “%.7f” format, which basically means a decimal number with 7 decimal places (e.g. -31.8267316)

exiftool -TagsFromFile {input} -All:All -XMP -ExtractEmbedded -overwrite_original {output}

- -TagsFromFile takes the Exif tags stored in the files previously created by Immich

- -All:All takes into consideration all the tags it possibly can

- -XMP same as above but with XMP tags, which tend to be non-standard metadata defined by the camera or editing software vendor

There was a tiny little additional problem, unfortunately: apparently, DJI cameras need to be connected to a smartphone or tablet via Bluetooth after having been disconnected for a long period of time in order to get the right date and time. This caused the timestamps in my videos to be off by around 90 minutes. I don’t know the exact amount of time because I didn’t have a solid reference besides a little video I took with my phone, so I estimate that they still might be off by about 3 minutes tops give or take. Sounds okay.

Naturally, I had to change the file timestamps but also the ones found in the Exif tags. Had I only applied the change to the files, some video players would still show the error, because they sometimes prefer the metadata over what the filesystem says. Having everything correctly time-coded also helps with organization, since timelines are built from those timestamps.

Changing the file timestamps was easy. In Windows (PowerShell) we could do something like:

(Get-Item {input}).LastWriteTime = {new_date}

Don’t forget that new_date must have the correct format, in this case I opted for MM/dd/yyyy HH:mm because that worked on my Windows install.

(we’re close, promise)

Buuuut, and this is a big but, the intention was to subtract 90 minutes from the creation/modification datetime present on the files, so I needed to extract it first. Good thing is that it’s not a destructive operation, so I left it for later, once FFmpeg and ExifTool had done their job.

One little thing before having the complete script: when it comes to Exif tags, at least in this case, the timezone was always set to UTC and the format was yyyy-MM-dd HH:mm:ss, so that had to be taken into account, although not for changing the timestamps Windows sees.
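The date arithmetic boils down to two shifts: minus 90 minutes to undo the clock error, then plus 300 minutes to express the result in UTC for the Exif tags. Here is a minimal sketch of that idea, assuming GNU date (the -d option); the helper name and the sample timestamp are made up for illustration.

```bash
#!/bin/bash
# Shift a "YYYY-MM-DD HH:MM" wall-clock timestamp by a signed number of minutes.
# The value is treated as UTC only to keep the arithmetic free of
# daylight-saving jumps. Requires GNU date.
shift_minutes() {
  local stamp="$1" minutes="$2" format="${3:-%F %H:%M}"
  local epoch
  epoch=$(TZ=UTC date -d "$stamp" +%s) || return 1
  TZ=UTC date -d "@$(( epoch + minutes * 60 ))" +"$format"
}

wrong="2024-03-10 10:15"                                    # made-up example value
right=$(shift_minutes "$wrong" -90)                         # undo the 90-minute clock error
exif_utc=$(shift_minutes "$right" 300 "%Y:%m:%d %H:%M:00")  # local time to UTC, Exif style

echo "$right"     # 2024-03-10 08:45
echo "$exif_utc"  # 2024:03:10 13:45:00
```

Going through epoch seconds avoids relying on how date parses relative expressions placed right after a time of day.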

Well, with all that in mind and a couple of extra details, the script would end up like this (now with a bash version for completeness):

$originals_path = {originals_path}

$processed_path = {processed_path}

Get-ChildItem $originals_path -Filter *.mp4 | Sort-Object -Property Name | Foreach-Object{

""

"Original file: $($_.Fullname)"

"Reducing video bitrate"

""

""

ffmpeg -hide_banner -loglevel error -hwaccel qsv -c:v h264_qsv -i $_.Fullname -c:v h264_qsv -global_quality 25 "$processed_path\$($_.Basename).mp4"

# qs stands for Quick Sync

$qs_file = (Get-Item "$processed_path\$($_.Basename).mp4")

"Bitrate-reduced file: $($qs_file.Fullname)"

""

# Get the current creation datetime (wrong creation date)

$wrongCreationDateTime = "{0:yyyy-MM-dd HH:mm}" -f $($_.CreationTime)

# Get the right creation datetime but in Windows format

$rightDateTimeWindowsFormatted = [datetime]::parseexact($wrongCreationDateTime, 'yyyy-MM-dd HH:mm', $null).AddMinutes(-90).toString('MM/dd/yyyy HH:mm')

# Get the right creation datetime but in Exif format (UTC with seconds)

$rightDateTimeExifUTCFormattedWithSeconds = [datetime]::parseexact($rightDateTimeWindowsFormatted, 'MM/dd/yyyy HH:mm', $null).AddMinutes(300).toString('yyyy:MM:dd HH:mm:00')

""

"Original file: $($_.Fullname)"

"QS file: $($qs_file.Fullname)"

"Wrong creation datetime: $wrongCreationDateTime"

"Right creation datetime in Windows format: $rightDateTimeWindowsFormatted"

"Right datetime in ExifTool UTC format with seconds: $rightDateTimeExifUTCFormattedWithSeconds"

""

"ExifTool copying all tags from original..."

exiftool -TagsFromFile $_.Fullname -All:All -XMP -ExtractEmbedded -overwrite_original $qs_file.Fullname

# Extracting coordinates info from XMP files

$coords = exiftool -gpsposition -c "%.7f" "$($qs_file.Fullname).xmp"

""

$coords

""

"ExifTool setting geoposition and updated timestamps..."

exiftool "-CreateDate=$rightDateTimeExifUTCFormattedWithSeconds" "-ModifyDate=$rightDateTimeExifUTCFormattedWithSeconds" "-Track*Date=$rightDateTimeExifUTCFormattedWithSeconds" "-Media*Date=$rightDateTimeExifUTCFormattedWithSeconds" -gpsposition="$coords" -overwrite_original $qs_file.Fullname

(Get-Item $qs_file).LastWriteTime = $rightDateTimeWindowsFormatted

""

""

"================================================================================================="

}

#!/bin/bash

originals_path={originals_path}

processed_path={processed_path}

for filename in "$originals_path"/*.mp4; do

echo

echo "Original file: $filename"

echo "Reducing video bitrate"

echo

echo

qs_filename="$processed_path/$(basename "$filename")"

ffmpeg -hide_banner -loglevel error -hwaccel qsv -c:v h264_qsv -i "$filename" -c:v h264_qsv -global_quality 25 "$qs_filename"

echo "Bitrate-reduced file: $qs_filename"

echo

# Modification datetime of the original file (off by 90 minutes)

wrong_datetime=$(date -r "$filename" +"%F %H:%M")

# Subtract the 90-minute clock error (GNU date, going through epoch seconds)

right_datetime=$(date -d "@$(( $(date -d "$wrong_datetime" +%s) - 90 * 60 ))" +"%F %H:%M")

# Add 300 minutes to get UTC, in the Exif format (with seconds)

right_datetime_exif_utc_w_seconds=$(date -d "@$(( $(date -d "$right_datetime" +%s) + 300 * 60 ))" +"%Y:%m:%d %H:%M:00")

echo

echo "Original file: $filename"

echo "QS file: $qs_filename"

echo "Wrong creation datetime: $wrong_datetime"

echo "Right datetime: $right_datetime"

echo "Right datetime in ExifTool UTC format with seconds: $right_datetime_exif_utc_w_seconds"

echo

echo "ExifTool copying all tags from original..."

exiftool -TagsFromFile "$filename" -All:All -XMP -ExtractEmbedded -overwrite_original "$qs_filename"

# Extracting coordinates info from XMP files

coords=$(exiftool -gpsposition -c "%.7f" "$qs_filename.xmp")

echo

echo "$coords"

echo

echo "ExifTool setting geoposition and updated timestamps..."

exiftool "-CreateDate=$right_datetime_exif_utc_w_seconds" "-ModifyDate=$right_datetime_exif_utc_w_seconds" "-Track*Date=$right_datetime_exif_utc_w_seconds" "-Media*Date=$right_datetime_exif_utc_w_seconds" -gpsposition="$coords" -overwrite_original "$qs_filename"

touch -d "$right_datetime" "$qs_filename"

echo

echo

echo "================================================================================================="

done

Final thoughts

All of this left me with much lighter videos, properly tagged regarding where they were captured and with files with the right timestamps. We went from an outlook described by the cover image to this:

This way it’s easier to edit, transmit or even just play them.

I know the more information there is in a video, the more flexibility it will offer when being processed; after all, computers still can’t programagically invent information out of nothing, right? That’s why I may end up using the actual original clips to merge them and obtain a larger video on which only then apply all the processes learned here so that a reasonably-sized video is generated.

What’s important is that if we gained knowledge we didn’t waste time, although the time invested in manually locating every piece of video will hardly be recovered, unless I can somehow extrapolate the GPS data and generate a data-based route which would later let me create a real-time map animation to overlay on top of the video or something like that.

Who knows. 😉

Why not use Premiere, Resolve or even Movie Maker?

Well, I think using FFmpeg is not only more versatile but more fun when dealing with multiple files and when not knowing exactly what to do, and I’m all about having fun while solving problems up to a certain point.

Of course, it’s also more difficult than using Resolve, which I typically use, but I sometimes feel more at home in the terminal and I was going to use it anyways because of ExifTool.

Besides, we use FFmpeg in this house: it’s free and open source and lets you fully use your GPU for encoding if you want. It also has a pretty big community.

I’ll use Resolve for color correction, I don’t think I want to deal with FFmpeg when using LUTs. 😔

For some day… hopefully

- Improve the code, it can surely be improved, especially if we can prevent ExifTool from being called twice, given it’s a destructive operation and it consumes quite some time. It could also be a bit more elegant

- Color correct the video

- Remember to connect the cameras to my phone the next time I want to record something